Using Ansible to build a high-availability NZBGet Usenet downloader

I’m limited to about 80MB/s of download bandwidth on my Digital Ocean droplet, where I run NZBGet to download large files from Usenet. Downloads don’t take long at all, but out of curiosity I wanted to see whether I could parallelize the work and download multiple files at the same time. I use Sonarr to search Usenet for freely distributable training videos, and Sonarr then sends them to NZBGet for downloading. Since Sonarr can send multiple files to NZBGet, where they get queued up, I figured I could shrink the queue by downloading them simultaneously.

Using Ansible and Terraform (DevOps automation tools), I can spin up droplets on demand, provision them, configure them as NZBGet download nodes and then destroy the instances when they’re done.
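As a rough illustration of that spin-up / configure / tear-down cycle, here is a minimal Python sketch that drives the two CLIs with `subprocess`. It assumes a Terraform configuration in the current directory, an Ansible inventory file named `hosts` and a playbook named `nzbget.yml`; those file names and the script name `cycle.py` are hypothetical. `terraform apply -auto-approve`, `terraform destroy -auto-approve` and `ansible-playbook -i ... --ask-vault-pass` are standard invocations.

```python
import subprocess
import sys
from typing import Callable, List, Sequence

Command = List[str]


def provision_commands(inventory: str = "hosts", playbook: str = "nzbget.yml") -> List[Command]:
    """Commands that create the droplets and then configure them.

    'hosts' and 'nzbget.yml' are hypothetical file names.
    """
    return [
        # Create (or reconcile) the droplets described in the Terraform config.
        ["terraform", "apply", "-auto-approve"],
        # Configure the droplets as NZBGet nodes; prompts for the vault password.
        ["ansible-playbook", "-i", inventory, "--ask-vault-pass", playbook],
    ]


def teardown_commands() -> List[Command]:
    """Destroy everything Terraform created once the download queues are empty."""
    return [["terraform", "destroy", "-auto-approve"]]


def run_all(commands: Sequence[Command], runner: Callable = subprocess.run) -> None:
    """Run each command in order; check=True makes a failing command raise and stop the run."""
    for cmd in commands:
        runner(cmd, check=True)


if __name__ == "__main__":
    # Usage (hypothetical script name): python cycle.py up | python cycle.py down
    action = sys.argv[1] if len(sys.argv) > 1 else ""
    if action == "up":
        run_all(provision_commands())
    elif action == "down":
        run_all(teardown_commands())
    else:
        sys.exit("usage: cycle.py up|down")
```

Keeping "up" and "down" as separate explicit actions means nothing is destroyed by accident while the nodes are still working through their queues.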

All of the instances run the same NZBGet config, and HAProxy distributes requests across them round-robin. I will probably switch this to Consul, but I wanted something quick, so I used a basic HAProxy config.
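For reference, a basic round-robin HAProxy setup looks roughly like the output of this Python sketch. It renders a `frontend` bound to port 6789 (NZBGet’s default web/API port) and a `backend` using `balance roundrobin`, with one `server` line per node. The node names and private IPs are hypothetical placeholders, the `global`/`defaults` sections (timeouts etc.) are omitted, and this is not the actual haproxy.cfg.j2 template used here.

```python
from typing import Dict

# Hypothetical node names and private IPs; 6789 is NZBGet's default web/API port.
NODES = {
    "nzbget1": "10.0.0.11",
    "nzbget2": "10.0.0.12",
    "nzbget3": "10.0.0.13",
    "nzbget4": "10.0.0.14",
}


def render_haproxy(nodes: Dict[str, str], port: int = 6789) -> str:
    """Render a minimal HAProxy frontend/backend pair that round-robins over nodes."""
    lines = [
        "frontend nzbget_vip",
        f"    bind *:{port}",
        "    mode http",
        "    default_backend nzbget_nodes",
        "",
        "backend nzbget_nodes",
        "    mode http",
        # Servers are used in turn, according to their weights (all equal here).
        "    balance roundrobin",
    ]
    for name, ip in sorted(nodes.items()):
        # 'check' enables health checks so a dead node is taken out of rotation.
        lines.append(f"    server {name} {ip}:{port} check")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_haproxy(NODES), end="")
```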

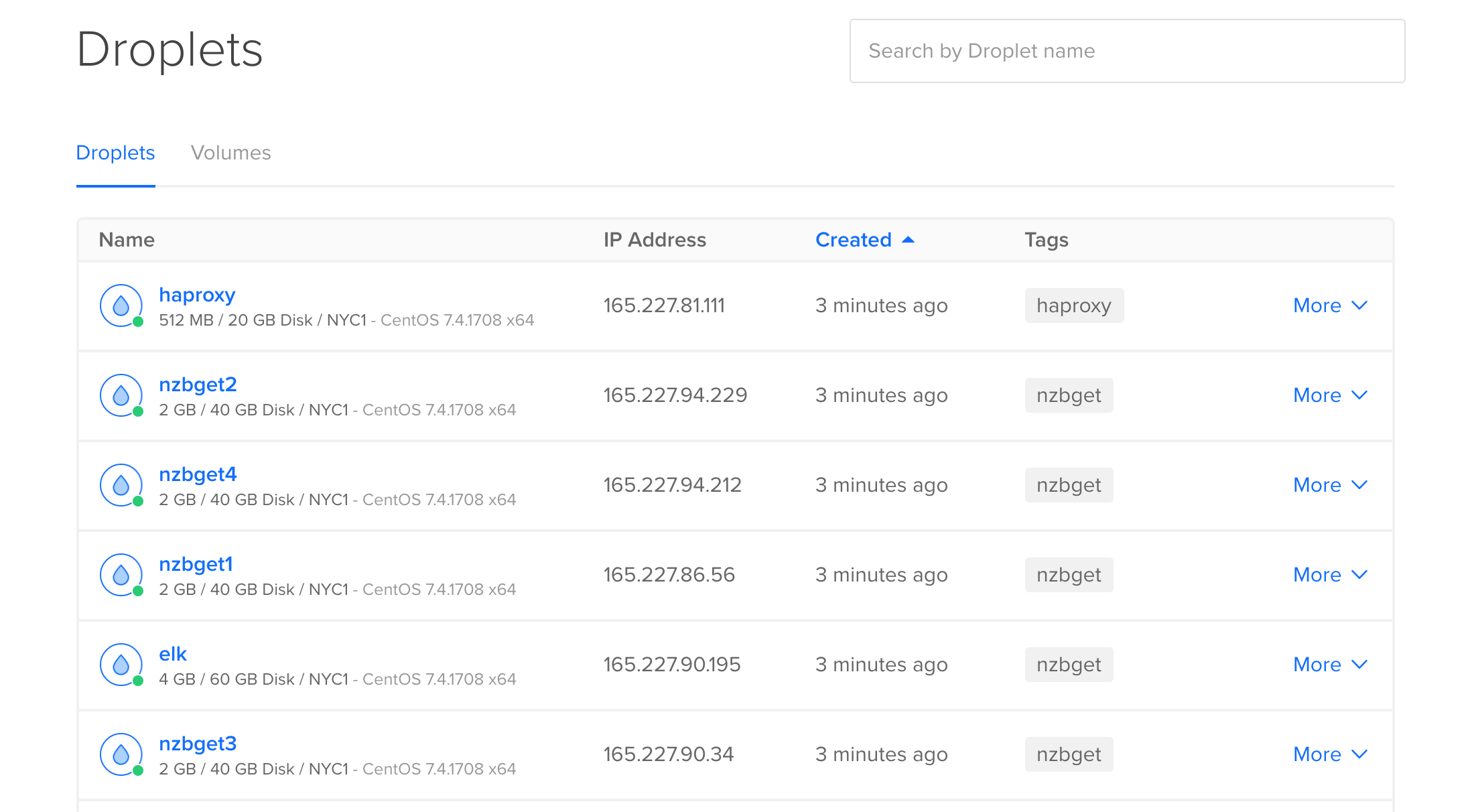

Terraform builds 4 NZBGet instances, 1 HAProxy instance and 1 ELK instance. It also configures a VIP, which I point Sonarr to. Here’s the Terraform config that builds an NZBGet server:

```hcl
resource "digitalocean_droplet" "nzbget1" { ... }
```

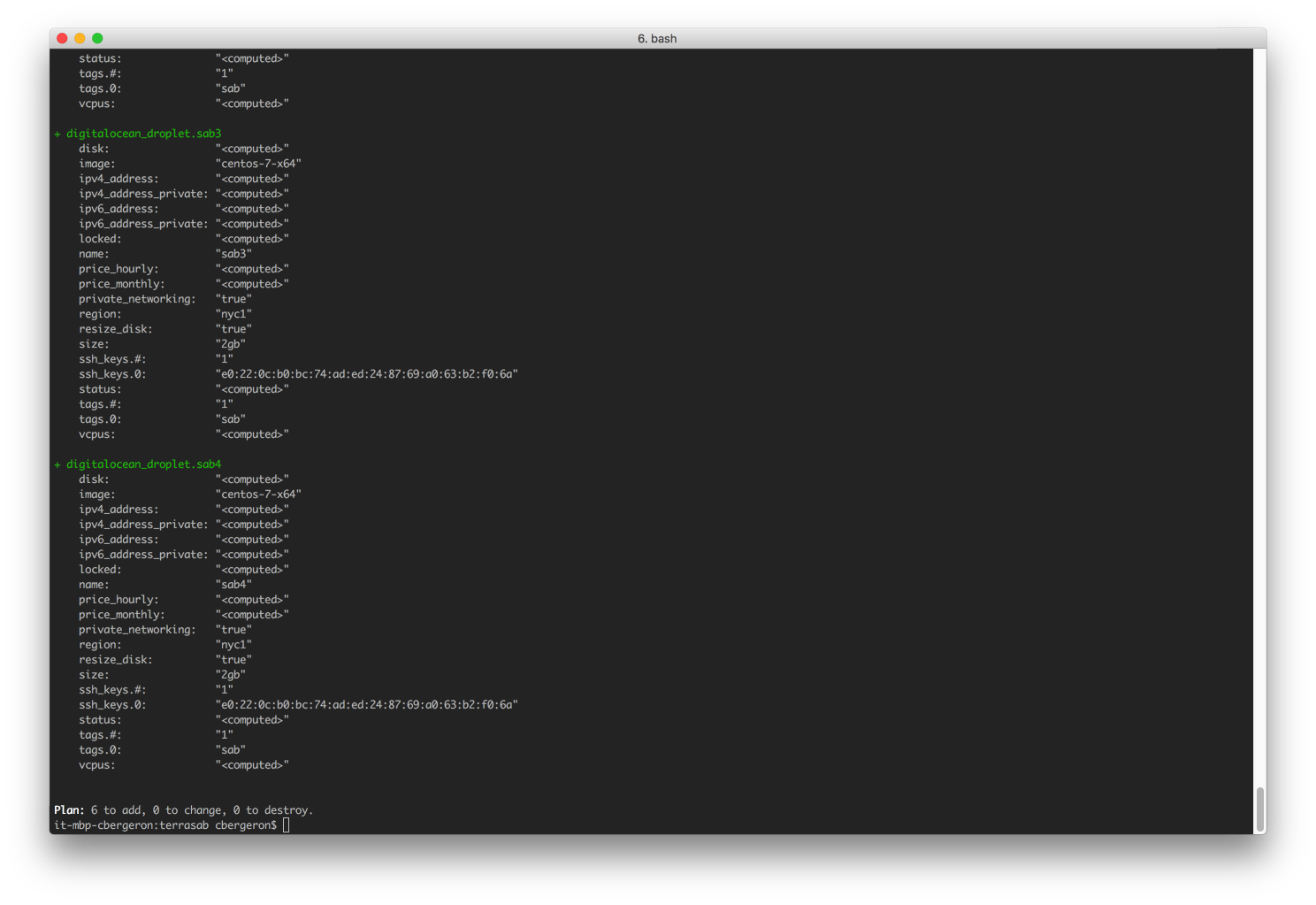

Here is the Terraform configuration that provisions 6 new hosts (1 Elasticsearch, 1 HAProxy and 4 NZBGet nodes):

I run a script that takes the IPs and node names from the Terraform output and updates my local /etc/hosts file, my Ansible hosts (inventory) file, the haproxy.cfg.j2 Ansible template and my ssh_config file (optional, for convenience).
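A script like that could look something like the following Python sketch, which covers the /etc/hosts, inventory and ssh_config pieces. It assumes a hypothetical Terraform output named `droplet_ips` that maps each droplet name to its IP, and node names ending in a number (`nzbget1`, `haproxy1`, ...) so hosts can be grouped by prefix. `terraform output -json` wraps each output in an object with a `value` key, which is all this reads. It prints the generated text rather than overwriting files, since writing /etc/hosts needs root.

```python
import json
import subprocess
from typing import Dict, List

# Hypothetical Terraform output name: a map of droplet name -> IP address.
OUTPUT_NAME = "droplet_ips"


def parse_terraform_output(raw: str, name: str = OUTPUT_NAME) -> Dict[str, str]:
    """Pull the name -> IP map out of `terraform output -json`."""
    # Each output is wrapped as {"sensitive": ..., "type": ..., "value": ...}.
    return dict(json.loads(raw)[name]["value"])


def hosts_lines(nodes: Dict[str, str]) -> List[str]:
    """Lines suitable for adding to /etc/hosts."""
    return [f"{ip}\t{name}" for name, ip in sorted(nodes.items())]


def inventory(nodes: Dict[str, str]) -> str:
    """INI-style Ansible inventory, grouped by name prefix (nzbget3 -> [nzbget])."""
    groups: Dict[str, List[str]] = {}
    for name, ip in sorted(nodes.items()):
        group = name.rstrip("0123456789")
        groups.setdefault(group, []).append(f"{name} ansible_host={ip}")
    out: List[str] = []
    for group, members in sorted(groups.items()):
        out.append(f"[{group}]")
        out.extend(members)
        out.append("")
    return "\n".join(out)


def ssh_config(nodes: Dict[str, str], user: str = "root") -> str:
    """Optional ~/.ssh/config entries so `ssh nzbget1` works directly."""
    blocks = [f"Host {name}\n    HostName {ip}\n    User {user}" for name, ip in sorted(nodes.items())]
    return "\n\n".join(blocks) + "\n"


if __name__ == "__main__":
    raw = subprocess.run(
        ["terraform", "output", "-json"], capture_output=True, text=True, check=True
    ).stdout
    nodes = parse_terraform_output(raw)
    # Print for review instead of editing /etc/hosts in place.
    print("\n".join(hosts_lines(nodes)))
    print(inventory(nodes))
    print(ssh_config(nodes), end="")
```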

Secrets, API keys and Passwords

I keep my API keys and passwords in Ansible Vault. This keeps them AES-encrypted while still allowing Ansible to insert them into the NZBGet config file templates. The API keys are for the Usenet providers and the passwords are for accessing the web UI.
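To illustrate what the templating step produces, here is a small Python sketch that substitutes secrets into `nzbget.conf` option lines. `ControlUsername`, `ControlPassword`, `Server1.Host`, `Server1.Username` and `Server1.Password` are NZBGet config options; the `vault_*` variable names and their values are hypothetical stand-ins for decrypted vault values. In Ansible itself this substitution is done by a Jinja2 template, not by Python.

```python
from typing import Dict

# Hypothetical stand-ins for values Ansible would read from the decrypted vault.
SECRETS = {
    "vault_webui_user": "nzbget",
    "vault_webui_password": "changeme",
    "vault_news_host": "news.example.com",
    "vault_news_user": "user",
    "vault_news_password": "secret",
}

# NZBGet option lines; each {placeholder} must match a key in the secrets dict.
TEMPLATE = """\
ControlUsername={vault_webui_user}
ControlPassword={vault_webui_password}
Server1.Host={vault_news_host}
Server1.Username={vault_news_user}
Server1.Password={vault_news_password}
"""


def render_nzbget_conf(secrets: Dict[str, str]) -> str:
    """Fill the template; a missing secret raises KeyError instead of writing a blank value."""
    return TEMPLATE.format(**secrets)


if __name__ == "__main__":
    print(render_nzbget_conf(SECRETS), end="")
```

Failing loudly on a missing secret mirrors what you want from the real template: a node with an empty server password is worse than a playbook run that stops.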

Here is the Ansible playbook that configures the NZBGet instances:

```yaml
#!/usr/bin/env ansible-playbook
```

Next steps

The next part of this post will cover automatically provisioning a Digital Ocean volume and setting it up as an NFS share, which I can then mount on all of the NZBGet nodes for centralized storage. Since I pay by the minute for the droplets, I want to be able to provision them quickly, download as much as I can as fast as possible and then destroy the infrastructure. I’ll keep the volume around, but I can destroy the NZBGet downloaders once they’ve finished their queues.

Technology used:

Terraform, Ansible, Ansible Vault, HAProxy, NZBGet, Sonarr, ELK and Digital Ocean.


https://chrisbergeron.com/2017/10/29/high_performance_nzbget/